LLMExecutor

Overview

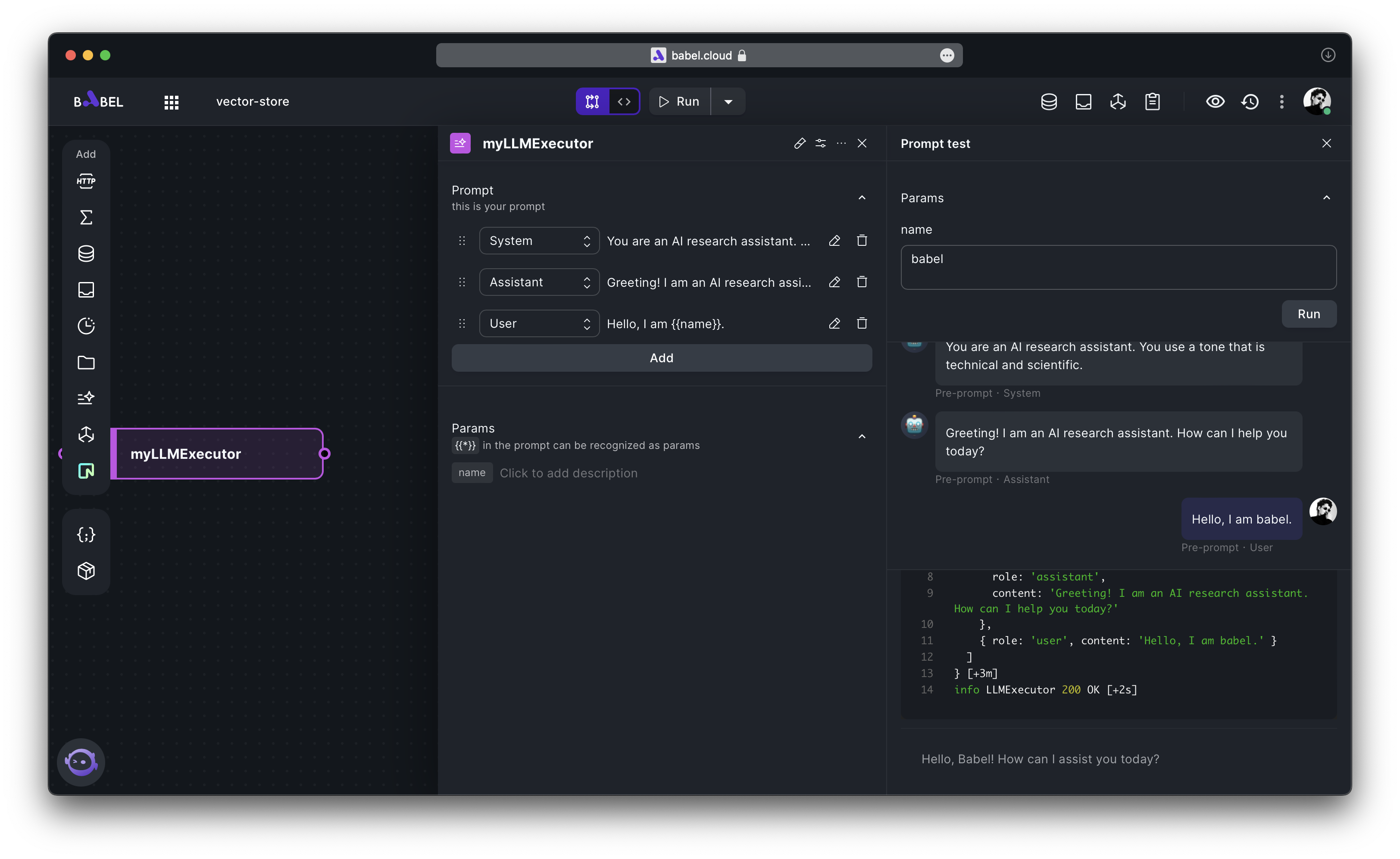

LLMExecutor (Large Language Model Executor) is the Element responsible for passing prompts to a Large Language Model (LLM) interface for processing.

Features

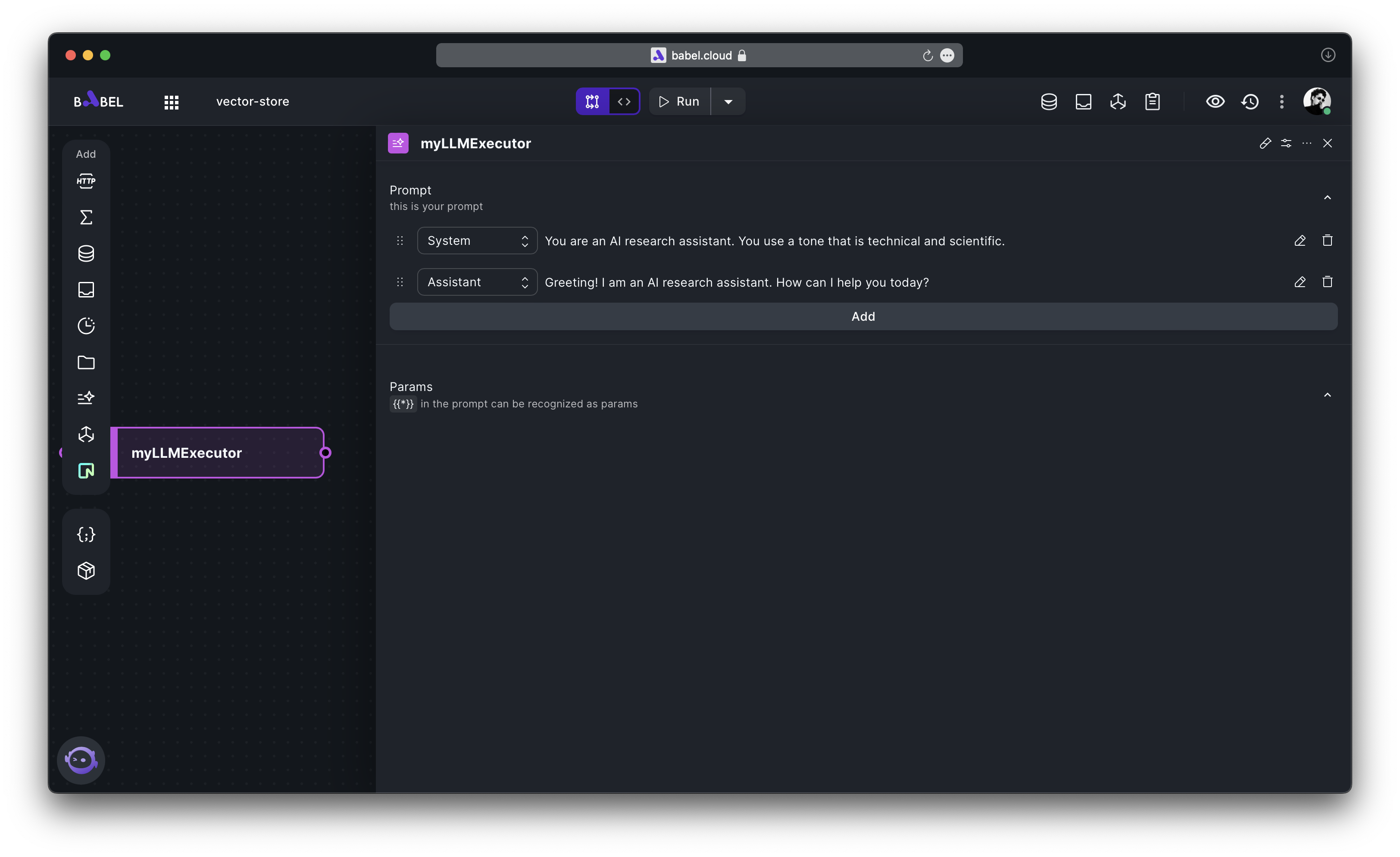

Prompt template

LLMExecutor provides prompt templates in which you can combine messages with different roles: system, user, and assistant.
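As a rough illustration, a template that mixes the three roles can be pictured as an ordered list of role/content pairs. The sketch below is hypothetical: the `Message` class and `ALLOWED_ROLES` set are not part of Babel or the LLMExecutor SDK. They only model the idea of a role-tagged message sequence.

```python
from dataclasses import dataclass

# The three message roles described for LLMExecutor prompt templates.
ALLOWED_ROLES = {"system", "user", "assistant"}


@dataclass
class Message:
    """One message in a prompt template (hypothetical structure, not Babel's API)."""
    role: str
    content: str

    def __post_init__(self) -> None:
        # Reject roles that the template does not support.
        if self.role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {self.role!r}")


# A template using all three roles; the assistant turn serves as a worked example
# that shows the model the expected style of answer.
template = [
    Message("system", "You are a concise technical assistant."),
    Message("user", "Summarize what a compiler does."),
    Message("assistant", "A compiler translates source code into machine code."),
    Message("user", "Now summarize what an interpreter does."),
]

for m in template:
    print(f"{m.role}: {m.content}")
```

Order matters here: the system message sets overall behavior, and earlier user/assistant pairs act as context for the final user message.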

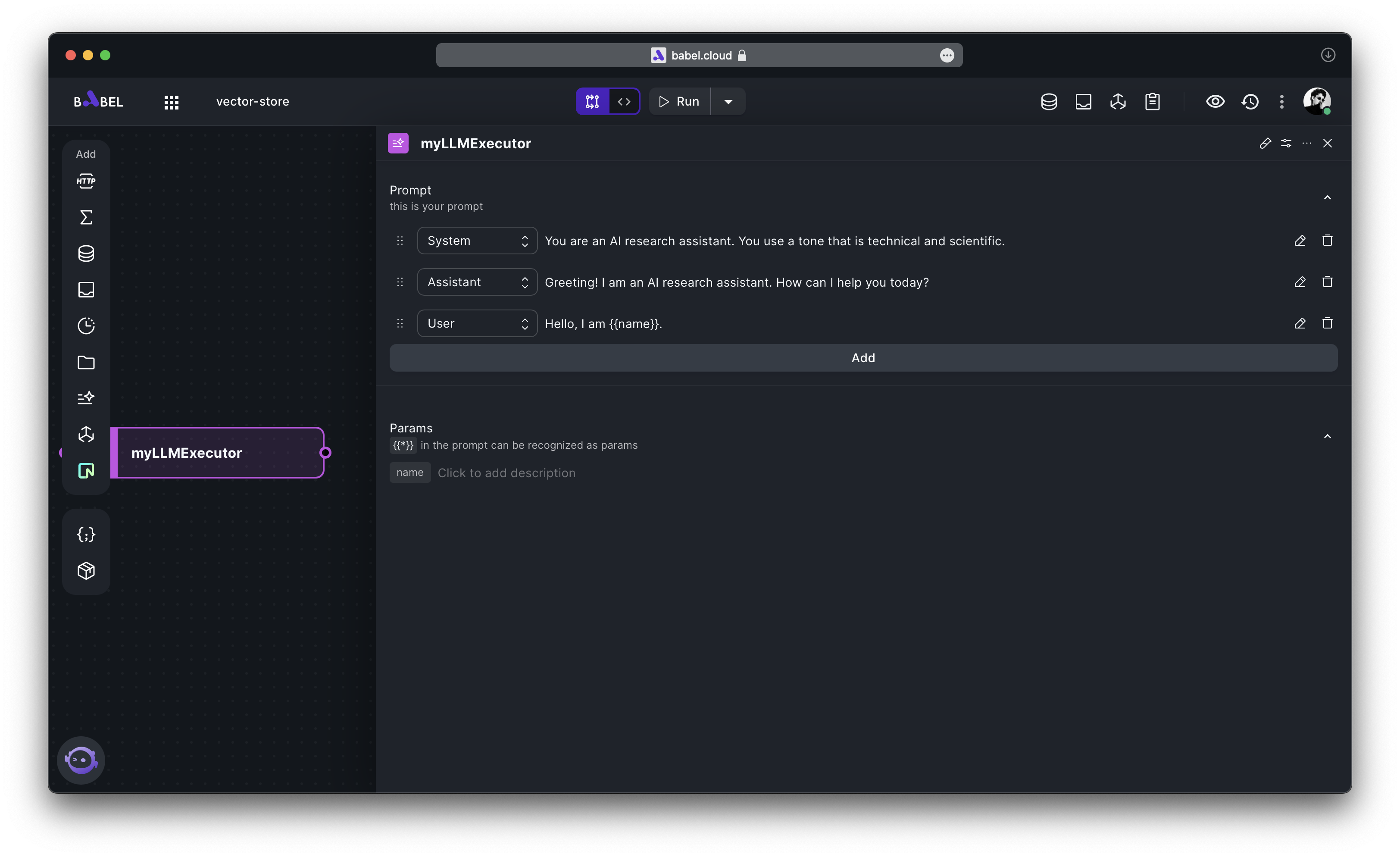

Prompt Params

Within a message, you can define a variable by wrapping its name in double curly braces, for example {{topic}}. At runtime, you set Params to assign a value to each variable, which makes the prompt dynamic.
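The substitution step can be sketched as a regular-expression replacement. This is a minimal sketch assuming that a variable name consists of word characters and that spaces inside the braces are tolerated; the `render` function is hypothetical and does not describe how LLMExecutor itself resolves Params.

```python
import re

# Matches {{name}}, allowing optional spaces inside the braces (an assumption).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(text: str, params: dict) -> str:
    """Replace each {{name}} in text with the value of params[name]."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in params:
            # Fail loudly instead of sending an unfilled variable to the model.
            raise KeyError(f"no Param supplied for variable {name!r}")
        return str(params[name])

    return PLACEHOLDER.sub(substitute, text)


message = "Write a {{length}} summary of {{topic}}."
print(render(message, {"length": "two-sentence", "topic": "photosynthesis"}))
# → Write a two-sentence summary of photosynthesis.
```

Raising on a missing Param is a design choice for the sketch; a real executor might instead leave the placeholder untouched or substitute an empty string.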

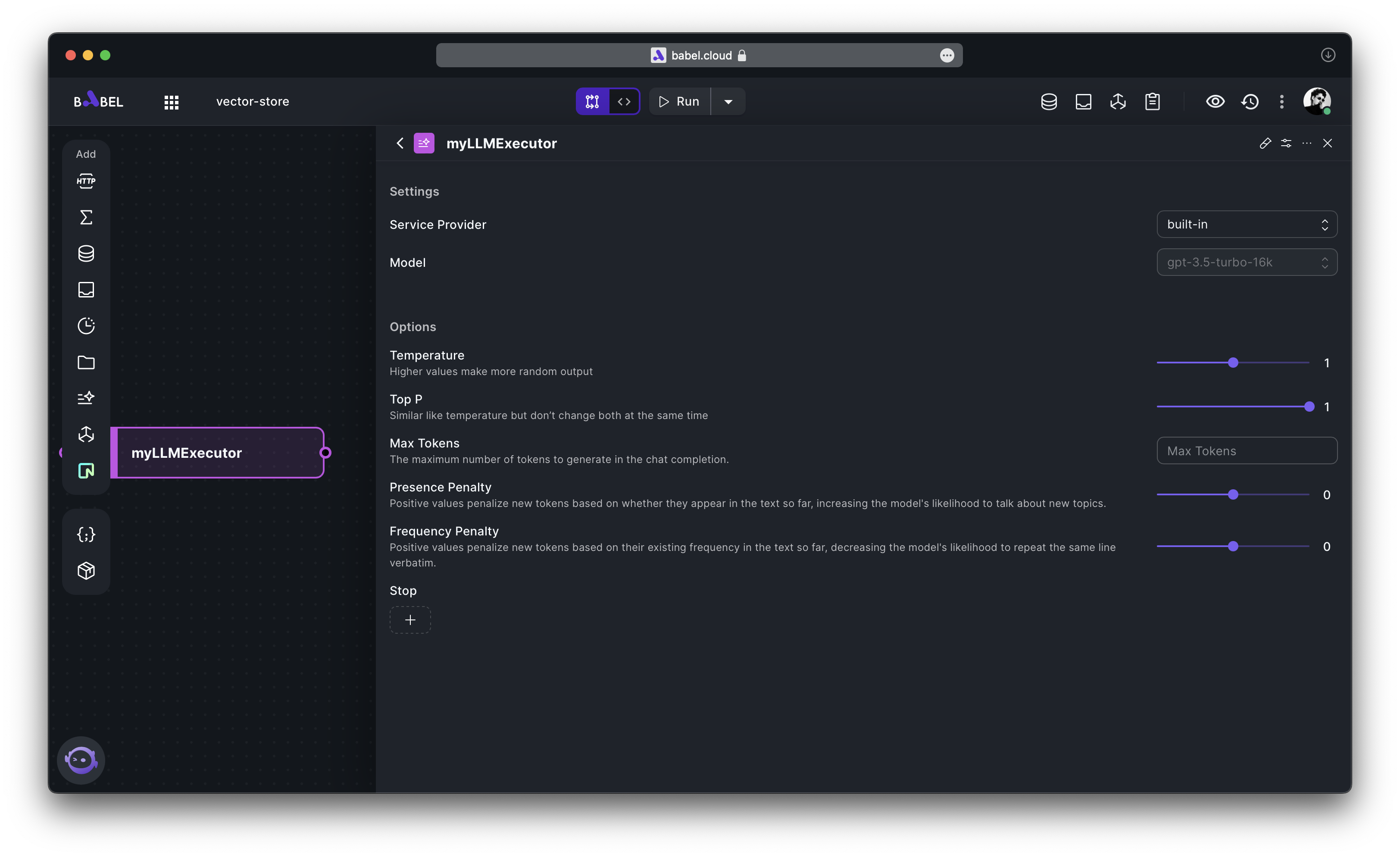

Service Provider

You need to configure a service provider to communicate with the Large Language Model interface. Babel includes a built-in service provider that gives each user a limited amount of free usage every month, which you can use to explore the capabilities of the LLMExecutor Element.

Currently, the built-in service provider only supports the gpt-3.5-turbo-16k model.

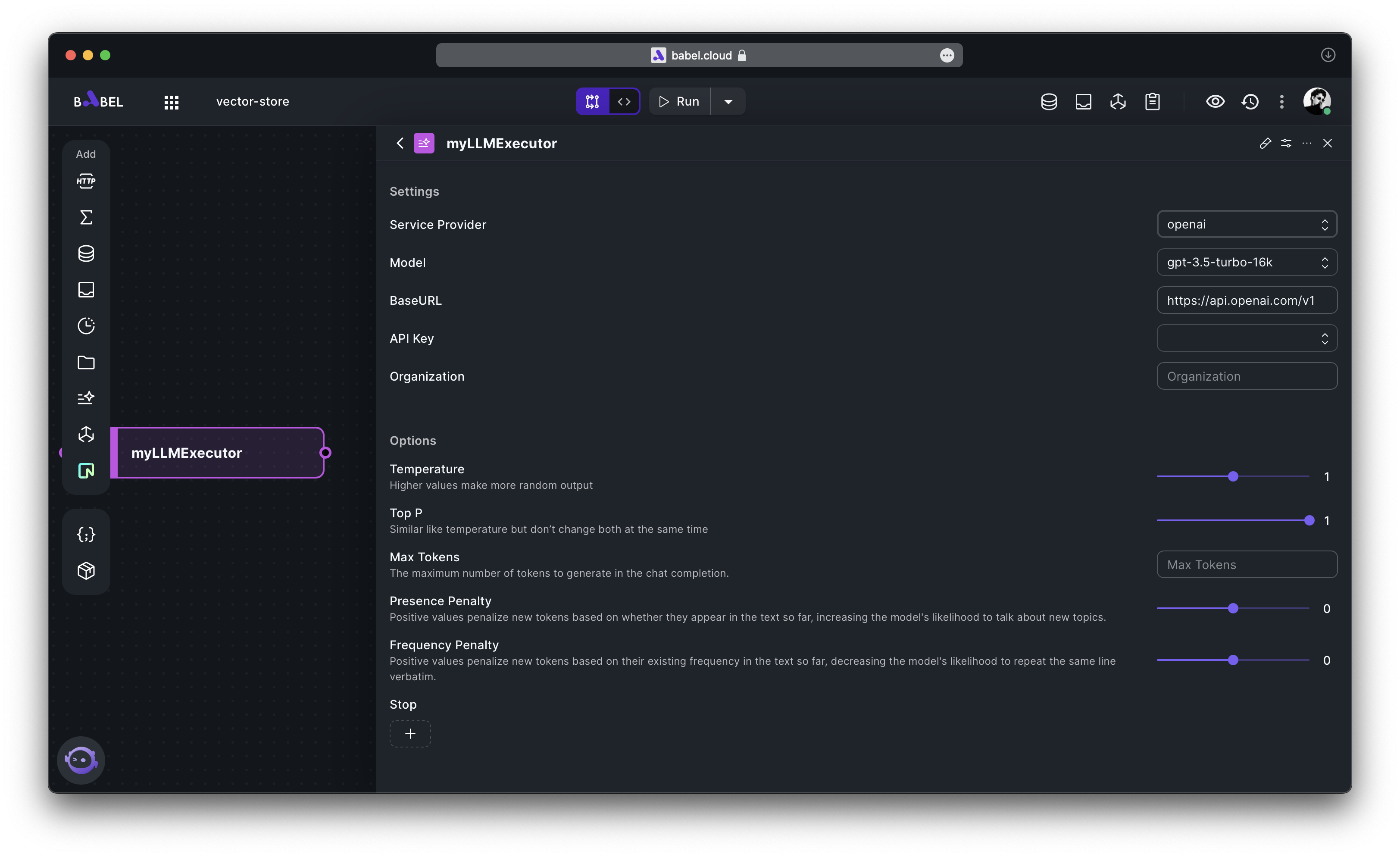

For production applications, we recommend using the OPENAI service provider.
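For context, OpenAI's public Chat Completions API accepts a JSON body with a `model` name and a list of `messages`, each carrying a `role` (such as system, user, or assistant) and `content`. The sketch below only builds such a request with the standard library and does not send it. It is an assumption that Babel's OPENAI provider sends something of this shape; `build_chat_request` is a hypothetical helper, the API key is a placeholder, and the default model name is taken from this page and may no longer be offered by OpenAI.

```python
import json
import urllib.request

# OpenAI's public Chat Completions endpoint.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, messages: list,
                       model: str = "gpt-3.5-turbo-16k") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions HTTP request. Hypothetical helper."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request(
    "YOUR_API_KEY",  # placeholder; never hard-code a real key
    [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Say hello."},
    ],
)
print(req.get_method(), req.full_url)
```

Sending it would require a valid key and a call such as `urllib.request.urlopen(req)`; that step is left out so the sketch runs offline.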

Access

Access in the Prompt test

Once you have finished crafting your prompt, you can test it.
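Before testing, it can help to confirm that every {{variable}} in the prompt has a Param value. The sketch below is a hypothetical pre-flight check, not a feature of the Prompt test: it assumes messages are role/content dictionaries and that variable names are word characters, and it reports the names that still lack a value.

```python
import re

# Matches {{name}}, allowing optional spaces inside the braces (an assumption).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def missing_params(messages: list, params: dict) -> list:
    """Return variable names with no Param value, in first-seen order."""
    missing = []
    for message in messages:
        for name in PLACEHOLDER.findall(message["content"]):
            if name not in params and name not in missing:
                missing.append(name)
    return missing


prompt = [
    {"role": "system", "content": "You answer in {{language}}."},
    {"role": "user", "content": "Explain {{topic}} to a {{audience}}."},
]
print(missing_params(prompt, {"language": "English", "topic": "recursion"}))
# → ['audience']
```

An empty result means every variable is covered, so the test run will not send a prompt with unresolved placeholders.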

Access in the LLMExecutor SDK

To access the LLMExecutor Element from your code, refer to the LLMExecutor SDK section.